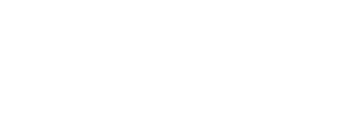

Network Settings

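First install VirtualBox locally, then add a CentOS virtual machine. Both are routine installations; the one point worth stressing is the network configuration. Open Settings > Network for the machine and set the network adapter to Bridged Adapter. Alan uses a Mac on Wi-Fi, so he selects en0: Wi-Fi (AirPort). Also make sure the Cable Connected box below it is checked.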

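Run vi /etc/sysconfig/network-scripts/ifcfg-eth0 to configure the network interface. The main settings to change are the following (the IP address is obtained dynamically here):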

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=dhcp
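The three settings above can also be applied without opening an editor. The following is a minimal sketch, assuming GNU sed (as shipped with CentOS); the helper name set_ifcfg_key is hypothetical and not part of any system tool.

```shell
# Hypothetical helper: set KEY=VALUE in an ifcfg-style file.
# An existing line for the key is rewritten in place; otherwise a new
# line is appended. Assumes GNU sed (-i without a suffix argument).
set_ifcfg_key() {
    local file="$1" key="$2" value="$3"
    if grep -q "^${key}=" "$file"; then
        sed -i "s|^${key}=.*|${key}=${value}|" "$file"
    else
        printf '%s=%s\n' "$key" "$value" >> "$file"
    fi
}

# Example use as root on the VM (commented out so the sketch has no side effects):
# cfg=/etc/sysconfig/network-scripts/ifcfg-eth0
# set_ifcfg_key "$cfg" ONBOOT yes
# set_ifcfg_key "$cfg" NM_CONTROLLED no
# set_ifcfg_key "$cfg" BOOTPROTO dhcp
```

Because the helper rewrites an existing key rather than appending a second copy, it should be safe to run more than once.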

Use ifconfig eth0 to check the connection status.

Installing the JDK

Download the JDK and extract it

cd /opt/

yum install wget -y

wget --no-cookies --no-check-certificate --header "Cookie: gpw_e24=http%3A%2F%2Fwww.oracle.com%2F; oraclelicense=accept-securebackup-cookie" "http://download.oracle.com/otn-pub/java/jdk/8u66-b17/jdk-8u66-linux-x64.tar.gz"

tar xzvf jdk-8u66-linux-x64.tar.gz

Install it using the alternatives command

cd /opt/jdk1.8.0_66/

alternatives --install /usr/bin/java java /opt/jdk1.8.0_66/bin/java 2

alternatives --config java

Select /opt/jdk1.8.0_66/bin/java to complete the installation, then register jar and javac with the following commands

alternatives --install /usr/bin/jar jar /opt/jdk1.8.0_66/bin/jar 2

alternatives --install /usr/bin/javac javac /opt/jdk1.8.0_66/bin/javac 2

alternatives --set jar /opt/jdk1.8.0_66/bin/jar

alternatives --set javac /opt/jdk1.8.0_66/bin/javac

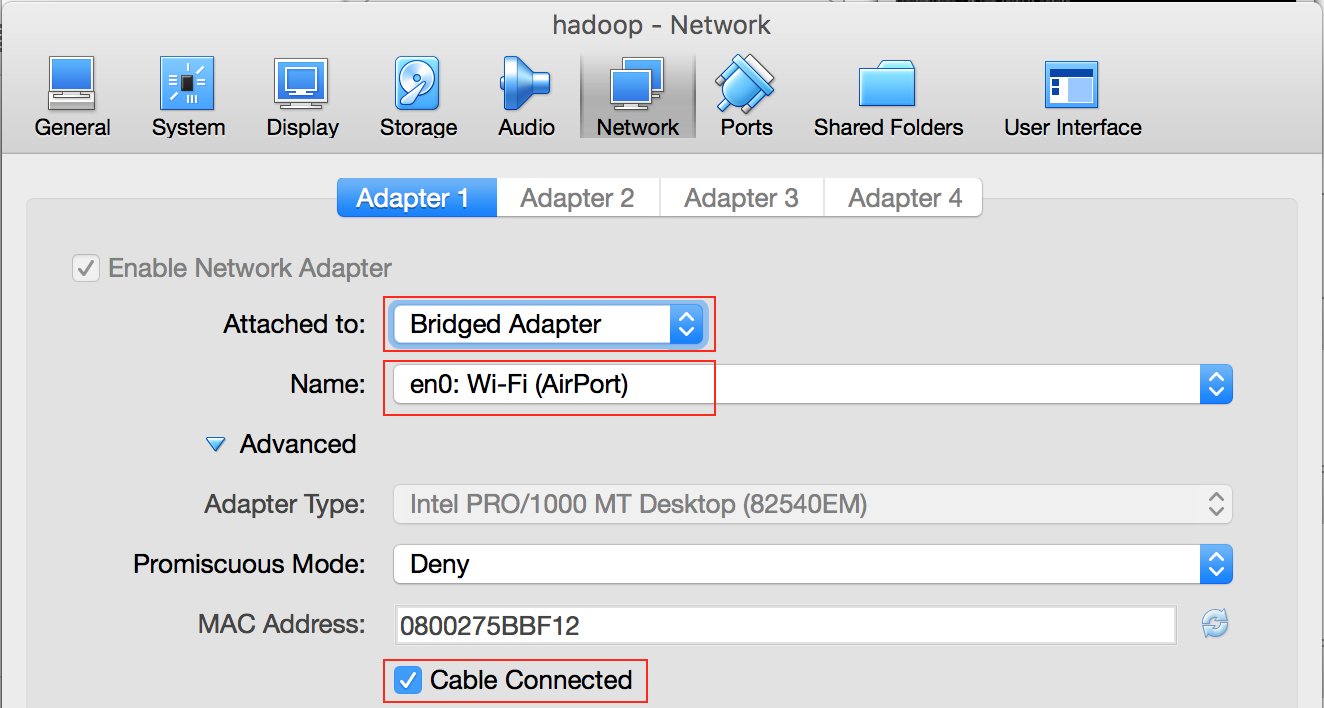

Check the Java version to confirm that the installation succeeded

[root@hadoop jdk1.8.0_66]# java -version

java version "1.8.0_66"

Java(TM) SE Runtime Environment (build 1.8.0_66-b17)

Java HotSpot(TM) 64-Bit Server VM (build 25.66-b17, mixed mode)
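If the version check needs to happen inside a script rather than by eye, the quoted version string can be pulled out of the output. This is a small sketch; parse_java_version is a hypothetical helper name. Note that java -version writes to standard error, so the stream has to be redirected before it can be piped.

```shell
# Hypothetical helper: read `java -version` output on stdin and print
# the quoted version string from the first line that contains one.
parse_java_version() {
    sed -n 's/^.* version "\([^"]*\)".*$/\1/p' | head -n 1
}

# Example (commented out; requires java on the PATH):
# java -version 2>&1 | parse_java_version
```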

Set the JAVA_HOME and JRE_HOME variables

export JAVA_HOME=/opt/jdk1.8.0_66

export JRE_HOME=/opt/jdk1.8.0_66/jre

Add the JDK directories to PATH

export PATH=$PATH:/opt/jdk1.8.0_66/bin:/opt/jdk1.8.0_66/jre/bin

To make this setting permanent, run vi /etc/profile and append the following at the end

PATH=/opt/jdk1.8.0_66/bin:/opt/jdk1.8.0_66/jre/bin:$PATH

export PATH

Run source /etc/profile to apply the change
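One side effect of putting a plain PATH=...:$PATH line in /etc/profile is that every additional source of the file prepends the same directories again. A guarded variant could look like the sketch below; path_prepend is a hypothetical helper name, not something the shell provides.

```shell
# Hypothetical helper: prepend a directory to PATH only if it is not
# already one of the colon-separated entries.
path_prepend() {
    case ":$PATH:" in
        *":$1:"*) ;;              # already present, nothing to do
        *) PATH="$1:$PATH" ;;
    esac
}

# Example lines for the end of /etc/profile (commented out here):
# path_prepend /opt/jdk1.8.0_66/jre/bin
# path_prepend /opt/jdk1.8.0_66/bin
# export PATH
```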

Installing Hadoop

First add a user named hadoop and set its password

adduser hadoop

passwd hadoop

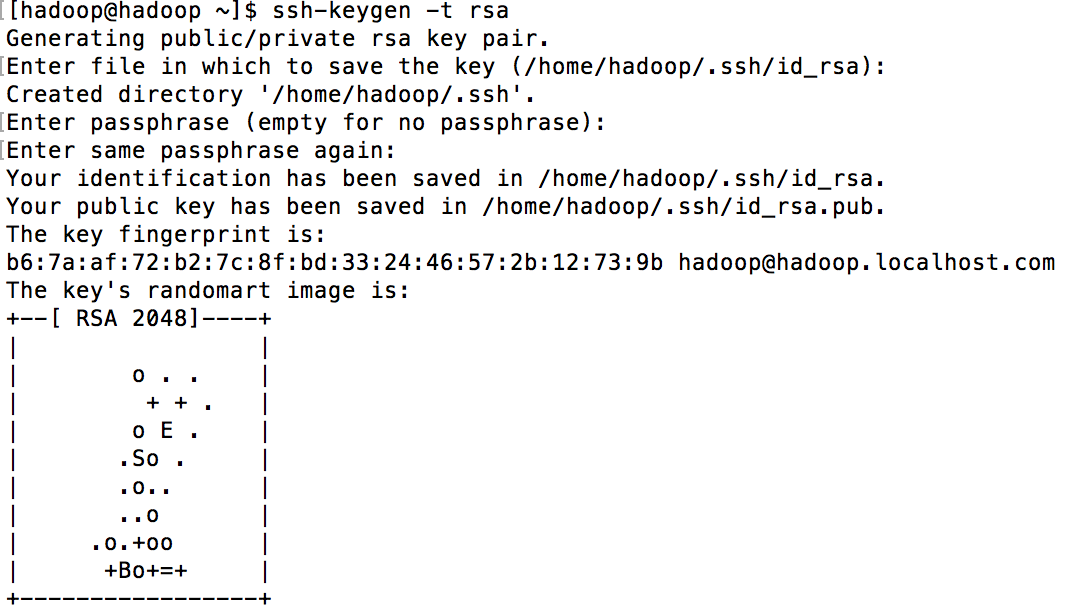

Next, switch to the hadoop user and set up key-based SSH login

su - hadoop

ssh-keygen -t rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 0600 ~/.ssh/authorized_keys

[hadoop@hadoop ~]$ ssh localhost

The authenticity of host 'localhost (::1)' can't be established.

RSA key fingerprint is dd:82:21:61:3a:c2:be:f6:94:ff:e6:2c:82:47:1c:69.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (RSA) to the list of known hosts.

[hadoop@hadoop ~]$ exit
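If ssh localhost still asks for a password after these steps, file permissions are a common culprit, since sshd may ignore keys whose files are too widely accessible. The sketch below checks for the conventional modes (700 on the directory, 600 on authorized_keys). It assumes GNU stat, and check_ssh_perms is a hypothetical helper name.

```shell
# Hypothetical helper: verify the conventional permissions on an .ssh
# directory and its authorized_keys file. Prints one line per problem
# and returns non-zero if any was found. Assumes GNU stat (-c '%a').
check_ssh_perms() {
    local dir="$1" status=0
    if [ "$(stat -c '%a' "$dir")" != "700" ]; then
        echo "$dir should have mode 700"
        status=1
    fi
    if [ "$(stat -c '%a' "$dir/authorized_keys")" != "600" ]; then
        echo "$dir/authorized_keys should have mode 600"
        status=1
    fi
    return "$status"
}

# Example (commented out): check_ssh_perms ~/.ssh
```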

Next, download Hadoop and extract it into the home directory

cd ~

wget http://apache.opencas.org/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz

tar xzvf hadoop-2.6.0.tar.gz

mv hadoop-2.6.0 hadoop

Run vi ~/.bashrc and append the following Hadoop environment variables at the end

export HADOOP_HOME=/home/hadoop/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Run source ~/.bashrc to apply the changes. As before, echo $PATH can be used to verify the result.

Run vi /home/hadoop/hadoop/etc/hadoop/hadoop-env.sh and set the JAVA_HOME environment variable as follows:

export JAVA_HOME=/opt/jdk1.8.0_66/

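Hadoop has quite a few configuration files. We start by configuring a basic single-node Hadoop cluster. Change to the configuration directory: cd /home/hadoop/hadoop/etc/hadoop/

Edit core-site.xml and add the following configuration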

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

Edit hdfs-site.xml and add the following configuration

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>

</property>

</configuration>

Edit yarn-site.xml and add the following configuration

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

Edit mapred-site.xml

cp mapred-site.xml.template mapred-site.xml

vi mapred-site.xml

Add the following configuration

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>
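With four files edited by hand, it can help to read a value back before starting any daemon. The sketch below is a deliberately naive reader for the simple name/value layout used in this walkthrough, not a real XML parser; get_site_property is a hypothetical helper name. On a machine where Hadoop is already on the PATH, hdfs getconf -confKey serves a similar purpose.

```shell
# Hypothetical helper: print the <value> that follows a given <name> in
# a Hadoop *-site.xml file. Line-oriented and naive: it assumes each
# <name> and <value> element sits on a single line, as in this guide.
get_site_property() {
    local file="$1" name="$2"
    awk -v want="$name" '
        /<name>/  { n = $0; sub(/.*<name>/, "", n);  sub(/<\/name>.*/, "", n) }
        /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                    if (n == want) { print v; exit } }
    ' "$file"
}

# Example (commented out):
# get_site_property /home/hadoop/hadoop/etc/hadoop/core-site.xml fs.default.name
```

An empty result means the property was not found, which usually points at a typo in the name or a property placed outside the configuration element.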

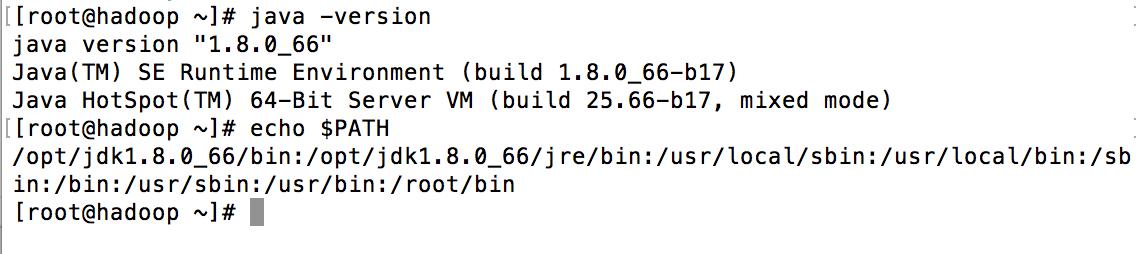

Run cd ~ and format the NameNode with hdfs namenode -format. Then change to the sbin directory (cd /home/hadoop/hadoop/sbin/) and run start-dfs.sh and start-yarn.sh. After that, run jps:

[hadoop@hadoop ~]$ jps

1808 SecondaryNameNode

1445 NameNode

3274 Jps

3194 NodeManager

3099 ResourceManager
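Reading a jps listing by eye makes it easy to overlook a daemon that failed to start. The sketch below compares a listing against the five daemons that start-dfs.sh and start-yarn.sh normally bring up on a single node; missing_daemons is a hypothetical helper name.

```shell
# Hypothetical helper: read jps output on stdin and print the name of
# each expected single-node Hadoop daemon that is absent from it.
missing_daemons() {
    local listing name
    listing="$(cat)"
    for name in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # A daemon counts as running only if its name is exactly the
        # second column of some line (so NameNode != SecondaryNameNode).
        if ! printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            printf '%s\n' "$name"
        fi
    done
}

# Example (commented out; requires the JDK's jps on the PATH):
# jps | missing_daemons
```

Fed the sample listing shown above, the sketch reports DataNode, because that listing contains no DataNode line. If the same happens on your machine, the DataNode log under the Hadoop logs directory is a reasonable place to start looking.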

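The Hadoop NameNode web interface listens on port 50070 by default. You can now try opening it in a browser, for example http://192.168.1.109:50070/ (use ifconfig to find the current IP address).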

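A server is often given a domain name as well. To make the name resolve locally, edit the hosts file on your own computer. On a Mac, for example, edit /private/etc/hosts (note: when working in a terminal, use sudo su to switch to the root account).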

192.168.1.109 master.hadoop.com

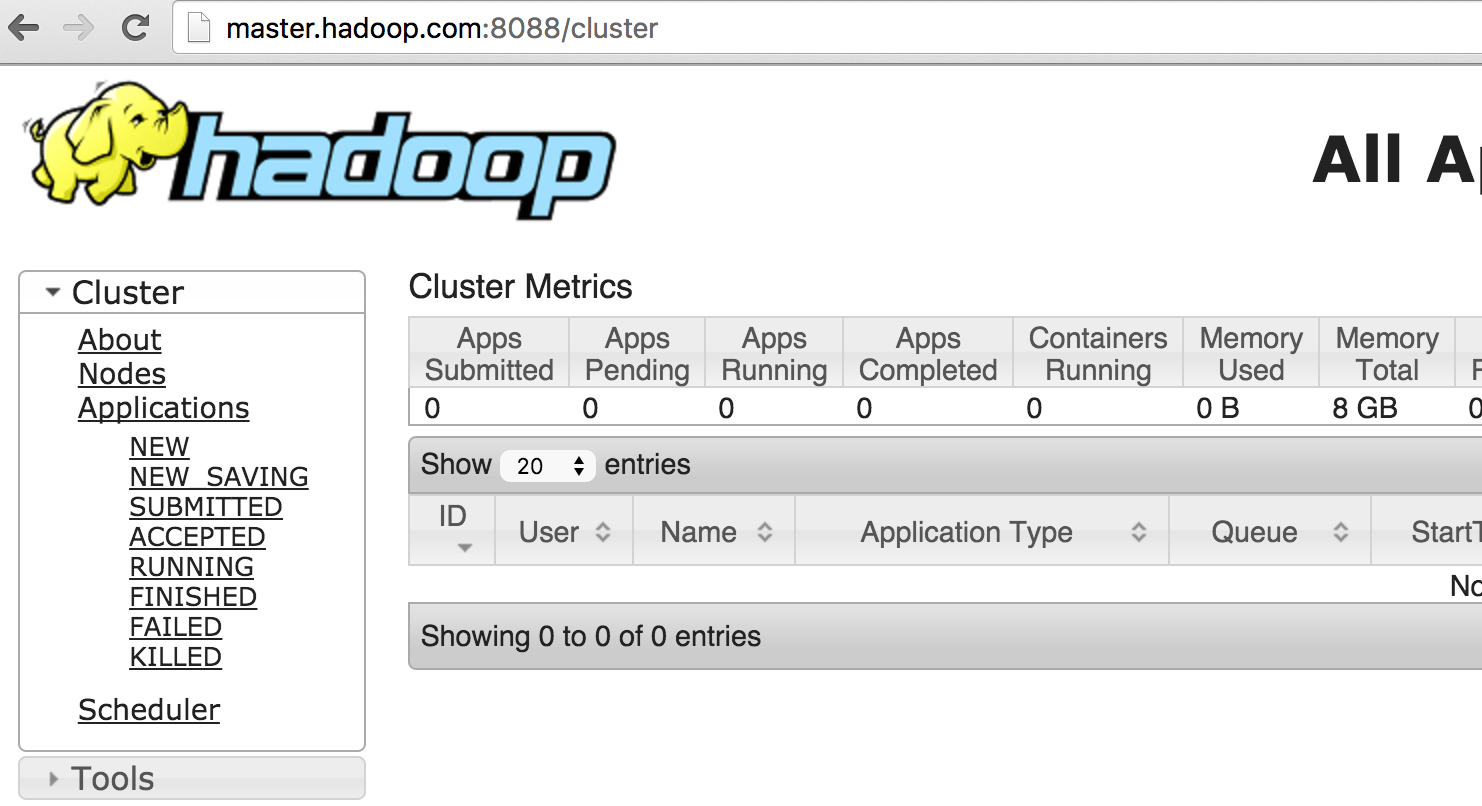

We can now visit http://master.hadoop.com:8088/ to view information about the cluster and all applications

Installing Hive

cd /home/hadoop

wget http://apache.opencas.org/hive/hive-1.2.1/apache-hive-1.2.1-bin.tar.gz

tar xzf apache-hive-1.2.1-bin.tar.gz

mv apache-hive-1.2.1-bin hive

chown -R hadoop hive

Set the environment variables

su - hadoop

export HADOOP_PREFIX=/home/hadoop/hadoop

export HIVE_HOME=/home/hadoop/hive

export PATH=$HIVE_HOME/bin:$PATH

Starting Hive

Before starting Hive, some preparation is needed: create the /tmp and /user/hive/warehouse directories in HDFS and give them g+w permission

cd /home/hadoop/hive

hadoop fs -mkdir /tmp

hadoop fs -mkdir -p /user/hive/warehouse

hadoop fs -chmod g+w /tmp

hadoop fs -chmod g+w /user/hive/warehouse
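The directory preparation can be wrapped in a small function so that it is repeatable and stops at the first failure. This is only a sketch: prepare_hive_dirs and the HADOOP_CMD variable are hypothetical names introduced here, with HADOOP_CMD defaulting to the real hadoop command and existing only so the steps can be previewed by setting it to echo. It uses -mkdir -p, which creates missing parent directories and does not fail if the directory already exists.

```shell
# Hypothetical helper: create the HDFS directories Hive expects and make
# them group-writable. HADOOP_CMD is an assumption of this sketch; set it
# to "echo" to preview the commands without touching HDFS.
prepare_hive_dirs() {
    local hadoop_cmd="${HADOOP_CMD:-hadoop}" dir
    for dir in /tmp /user/hive/warehouse; do
        $hadoop_cmd fs -mkdir -p "$dir" || return 1
        $hadoop_cmd fs -chmod g+w "$dir" || return 1
    done
}

# Preview (commented out):  HADOOP_CMD=echo prepare_hive_dirs
# Real run (commented out): prepare_hive_dirs
```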

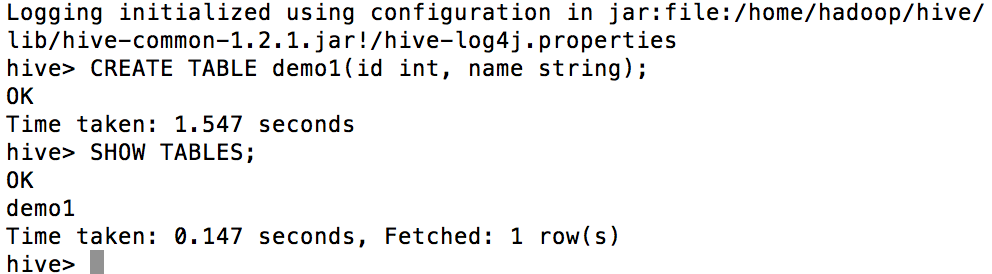

Once the setup above is complete, run bin/hive to start Hive and try creating a table as a test

Installing HBase

cd ~

wget http://apache.opencas.org/hbase/stable/hbase-1.1.2-bin.tar.gz

tar -xzvf hbase-1.1.2-bin.tar.gz

mv hbase-1.1.2 HBase

vi HBase/conf/hbase-env.sh

export JAVA_HOME=/opt/jdk1.8.0_66/

vi HBase/conf/hbase-site.xml

<configuration>

<!-- Set the path where you want HBase to store its files. -->

<property>

<name>hbase.rootdir</name>

<value>file:/home/hadoop/HBase/HFiles</value>

</property>

<!-- Set the path where you want HBase to store its built-in ZooKeeper files. -->

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/home/hadoop/zookeeper</value>

</property>

</configuration>

HBase/bin/start-hbase.sh

If everything is working, you should see:

starting master, logging to /home/hadoop/HBase/bin/../logs/hbase-hadoop-master-hadoop.localhost.com.out

Stop the service

HBase/bin/stop-hbase.sh

vi HBase/conf/hbase-site.xml

Add

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

and change hbase.rootdir as follows

<property>

<name>hbase.rootdir</name>

<value>hdfs://localhost:9000/hbase</value>

</property>

HBase/bin/start-hbase.sh
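In distributed mode, hbase.rootdir has to point at the same NameNode address that core-site.xml declares, which is hdfs://localhost:9000 in this walkthrough. A mismatched host or port is an easy mistake to make. The sketch below extracts the host:port part of an hdfs:// URI so the two values can be compared; hdfs_authority is a hypothetical helper name.

```shell
# Hypothetical helper: print the host:port part of an hdfs:// URI.
# Returns non-zero for any other scheme (for example file:).
hdfs_authority() {
    local uri="$1"
    case "$uri" in
        hdfs://*)
            uri="${uri#hdfs://}"          # drop the scheme
            printf '%s\n' "${uri%%/*}"    # drop the path, if any
            ;;
        *) return 1 ;;
    esac
}

# Example comparison (commented out; the right-hand value is whatever
# hbase.rootdir is set to in HBase/conf/hbase-site.xml):
# [ "$(hdfs_authority hdfs://localhost:9000)" = "$(hdfs_authority hdfs://localhost:9000/hbase)" ] \
#     && echo "NameNode address matches"
```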

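Common Errors

1. The warning WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable appears

This is because the system is 64-bit while the bundled Hadoop native library was compiled for 32-bit. To fix it, download a 64-bit build of the native library: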

cd /home/hadoop/

wget http://dl.bintray.com/sequenceiq/sequenceiq-bin/hadoop-native-64-2.6.0.tar

tar -xvf hadoop-native-64-2.6.0.tar -C hadoop/lib

Run vi .bashrc and add the following

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

Run source .bashrc to apply the environment variables. The warning should then disappear.

TODO: try compiling 64-bit Hadoop from source; reference: http://kiwenlau.blogspot.com/2015/05/steps-to-compile-64-bit-hadoop-230.html

2. Hive reports [ERROR] Terminal initialization failed; falling back to unsupported

java.lang.IncompatibleClassChangeError: Found class jline.Terminal, but interface was expected

Run vi .bashrc and add

export HADOOP_USER_CLASSPATH_FIRST=true

Run source .bashrc to apply it

3. ERROR [main] client.ConnectionManager$HConnectionImplementation: Can't get connection to ZooKeeper

Make sure you run ./bin/start-hbase.sh before running ./bin/hbase shell
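A quick way to tell whether ZooKeeper is reachable before opening the HBase shell is to probe its client port, which is 2181 by default. The sketch below relies on bash's built-in /dev/tcp redirection, so it assumes bash rather than a plain POSIX sh; port_open is a hypothetical helper name.

```shell
# Hypothetical helper: succeed if a TCP connection to host:port can be
# opened. Uses bash's /dev/tcp pseudo-device; the connection is opened
# in a subshell and closed again when that subshell exits.
port_open() {
    local host="$1" port="$2"
    (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null
}

# Example (commented out):
# if port_open localhost 2181; then
#     ./bin/hbase shell
# else
#     echo "ZooKeeper is not listening on 2181; run ./bin/start-hbase.sh first"
# fi
```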