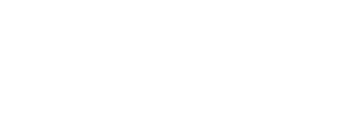

MMM(Multi-Master Replication Manager)

监控和管理MySQL的主主复制拓扑:

MMM部署步骤

1.配置主主复制及主从同步集群

更详细配置方法可参见MySQL主从复制一文

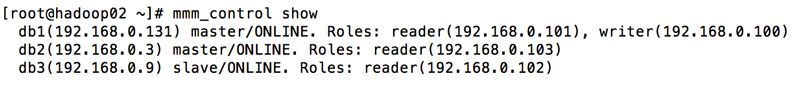

主1:192.168.0.131

主2:192.168.0.3

从1:192.168.0.9(本例仅使用一台从服务器,多台配置方法相同)

虚拟IP:192.168.0.100(写),192.168.0.101、192.168.0.102、192.168.0.103(读)

#主1 CREATE USER repl@'192.168.0.%' IDENTIFIED BY '123456'; GRANT REPLICATION SLAVE ON *.* TO repl@'192.168.0.%'; #my.cnf log-bin=mysql-bin max_binlog_size = 1000M binlog_format =row expire_logs_days = 7 sync_binlog = 1 server-id=1 relay_log=/var/lib/mysql/mysqld-relay-bin #导出 mysqldump --single-transaction --master-data=2 --all-databases --triggers --routines --events -uroot -p > all.sql scp all.sql root@192.168.0.3:/root #导出到主1 scp all.sql root@193.168.0.9:/root #导出到从1 #主2 #my.cnf log-bin=mysql-bin max_binlog_size = 1000M binlog_format =row expire_logs_days = 7 sync_binlog = 1 server-id=2 relay_log=/var/lib/mysql/mysqld-relay-bin #导入 mysql -uroot -p < all.sql #以下配置的后两项查看all.sql中对应内容 CHANGE MASTER TO MASTER_HOST = '192.168.0.131', MASTER_USER = 'repl', MASTER_PASSWORD = '123456', MASTER_LOG_FILE='mysql-bin.000004', MASTER_LOG_POS=194; start slave; #启动从库 #回到主1 CHANGE MASTER TO MASTER_HOST = '192.168.0.3', MASTER_USER = 'repl', MASTER_PASSWORD = '123456', MASTER_LOG_FILE='mysql-bin.000004', MASTER_LOG_POS=752920; #从1 #my.cnf log-bin=mysql-bin max_binlog_size = 1000M binlog_format =row expire_logs_days = 7 sync_binlog = 1 server-id=2 relay_log=/var/lib/mysql/mysqld-relay-bin #导入 mysql -uroot -p < all.sql #以下配置的后两项查看all.sql中对应内容,后两项的值请在主2上执行show master status来获取 CHANGE MASTER TO MASTER_HOST = '192.168.0.131', MASTER_USER = 'repl', MASTER_PASSWORD = '123456', MASTER_LOG_FILE='mysql-bin.000004', MASTER_LOG_POS=194; start slave; #启动从库

2.安装主从节点所需要的支持包

主从服务器所需进行的安装:

wget https://dl.fedoraproject.org/pub/epel/epel-release-latest-6.noarch.rpm wget http://rpms.famillecollet.com/enterprise/remi-release-6.rpm rpm -ivh epel-release-latest-6.noarch.rpm rpm -ivh remi-release-6.rpm vi /etc/yum.repos.d/epel.repo baseurl #取消注释 #mirrorlist #进行注释 vi /etc/yum.repos.d/remi.repo enabled=1 #将0修改为1

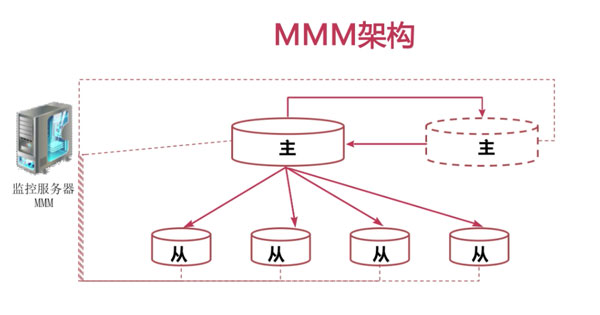

可通过yum search mmm查看MMM的包

主从同时安装mysql-mmm-agent.noarch:

yum install mysql-mmm-agent.noarch -y

#安装监控包,本例从服务器同时充当监控服务器

yum install mysql-mmm* -y

3.安装及配置MMM工具集

复制所需账号在配置过程中已经建立(repl),还需要两个账号,只需要在主1上进行操作即可:

grant replication client on *.* to 'mmm_monitor'@'192.168.0.%' identified by '123456'; #监控 grant super, replication client, process on *.* to 'mmm_agent'@'192.168.0.%' identified by '123456'; #代理

基它配置

#主1上进行如下配置

vi /etc/mysql-mmm/mmm_common.conf

replication_user repl

replication_password 123456

agent_user mmm_agent

agent_password 123456

<host db1>

ip 192.168.0.131

mode master

peer db2

</host>

<host db2>

ip 192.168.0.3

mode master

peer db1

</host>

<host db3>

ip 192.168.0.9

mode slave

</host>

<role writer>

hosts db1, db2

ips 192.168.0.100 #虚拟IP

mode exclusive

</role>

<role reader>

hosts db1, db2, db3

ips 192.168.0.101,192.168.0.102,192.168.0.103 #虚拟IP

mode balanced

</role>

#scp将配置拷贝到其它服务器上

scp /etc/mysql-mmm/mmm_common.conf root@192.168.0.3:/etc/mysql-mmm/

scp /etc/mysql-mmm/mmm_common.conf root@192.168.0.9:/etc/mysql-mmm/

然后将另两台服务器的this db1分别修改为this db2和this db3(/etc/mysql-mmm/mmm_agent.conf)

以上就完成了所有数据库节点的配置,接下来配置监控节点(从服务器)

vi /etc/mysql-mmm/mmm_mon.conf

ping_ips 192.168.0.131,192.168.0.3,192.168.0.9

monitor_password 123456

启动各节点的代理服务

/etc/init.d/mysql-mmm-agent start

4.运行MMM监控服务

在监控节点执行

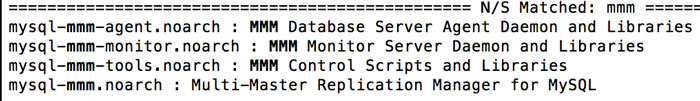

/etc/init.d/mysql-mmm-monitor start mmm_control show

5.测试

此时如果我们关闭主1上的MySQL服务来模拟故障(/etc/init.d/mysqld stop),再次在监控节点上执行mmm_control show会发现主服务器已进行了自动切换

注:如果主1服务恢得正常那么状态就会由master/HARD_OFFLINE变为master/AWAITING_RECOVERY

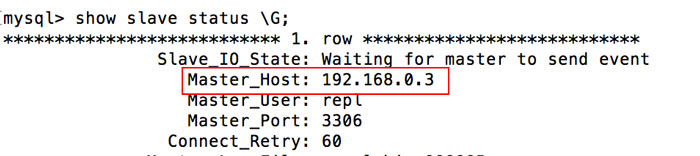

此时打开从库MySQL命令行执行show slave status \G;会发现虽然配置时只将主服务器配置为主1,却切换成了主2