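This project has been trending on GitHub over the past couple of days. It is a web-based spreadsheet application deeply integrated with the Python programming language.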

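It provides an integrated workflow for loading, cleaning, manipulating, and visualizing data. The spreadsheet backend is written in Go, and an integrated Python runtime is used to manipulate the sheet's contents. This lets you make full use of powerful Python libraries such as Pandas and NumPy for data analysis, while keeping the strengths of an Excel-style spreadsheet.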
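As a rough illustration of the load → clean → manipulate steps such a workflow chains together, here is a minimal plain-pandas sketch. It runs outside Grid Studio. The inline CSV data and the helper names `load`, `clean` and `summarize` are made up for illustration. Inside Grid Studio, the resulting DataFrame would then be written into the sheet, for example with the `sheet()` call used in the examples below.

```python
import io

import pandas as pd

# Hypothetical raw data: one exact duplicate row and one missing price
RAW_CSV = """product,region,price
apple,north,1.20
apple,north,1.20
banana,south,
cherry,north,3.50
banana,south,0.80
"""


def load(text: str) -> pd.DataFrame:
    # Load: parse CSV text into a DataFrame (an empty field becomes NaN)
    return pd.read_csv(io.StringIO(text))


def clean(df: pd.DataFrame) -> pd.DataFrame:
    # Clean: drop exact duplicate rows, then rows with a missing price
    return df.drop_duplicates().dropna(subset=["price"]).reset_index(drop=True)


def summarize(df: pd.DataFrame) -> pd.DataFrame:
    # Manipulate: average price per region
    return df.groupby("region", as_index=False)["price"].mean()


result = summarize(clean(load(RAW_CSV)))
print(result)
```

Visualization is left out here. In the spreadsheet that step would be a chart built on the written cells.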

Project URL: https://github.com/ricklamers/gridstudio

Test environment: Ubuntu 16.04

```bash
# Docker is required. If it is not installed, install it with:
curl -sSL https://get.docker.com/ | sh
# Let user xxx run Docker (replace xxx with your username; log out and back in for this to take effect)
sudo usermod -aG docker xxx
sudo systemctl start docker
# Install & run
git clone https://github.com/ricklamers/gridstudio
cd gridstudio && sudo ./run.sh
```

Now open http://127.0.0.1:8080 (or http://your.ip.addr:8080 from another machine). The default username and password are both `admin`.

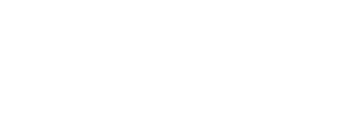

Examples:

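```python
import numpy as np
import pandas as pd
import math
import time

n = 10000  # number of rows written to the sheet

for i in range(9):
    # sample size doubles each iteration: 40, 80, ..., 10240
    sample_n = 2**i * 40
    print(sample_n)

    normally_dist = np.random.randn(sample_n)

    # repeat each draw so the column holds at least n values
    rep_count = math.ceil(n / sample_n)
    normally_dist = np.repeat(normally_dist, rep_count)

    # limit to exactly n values
    normally_dist = normally_dist[0:n]

    # sheet() is provided by Grid Studio's Python runtime;
    # this writes the column starting at cell A1
    sheet("A1", pd.DataFrame(normally_dist))
    time.sleep(1)
```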

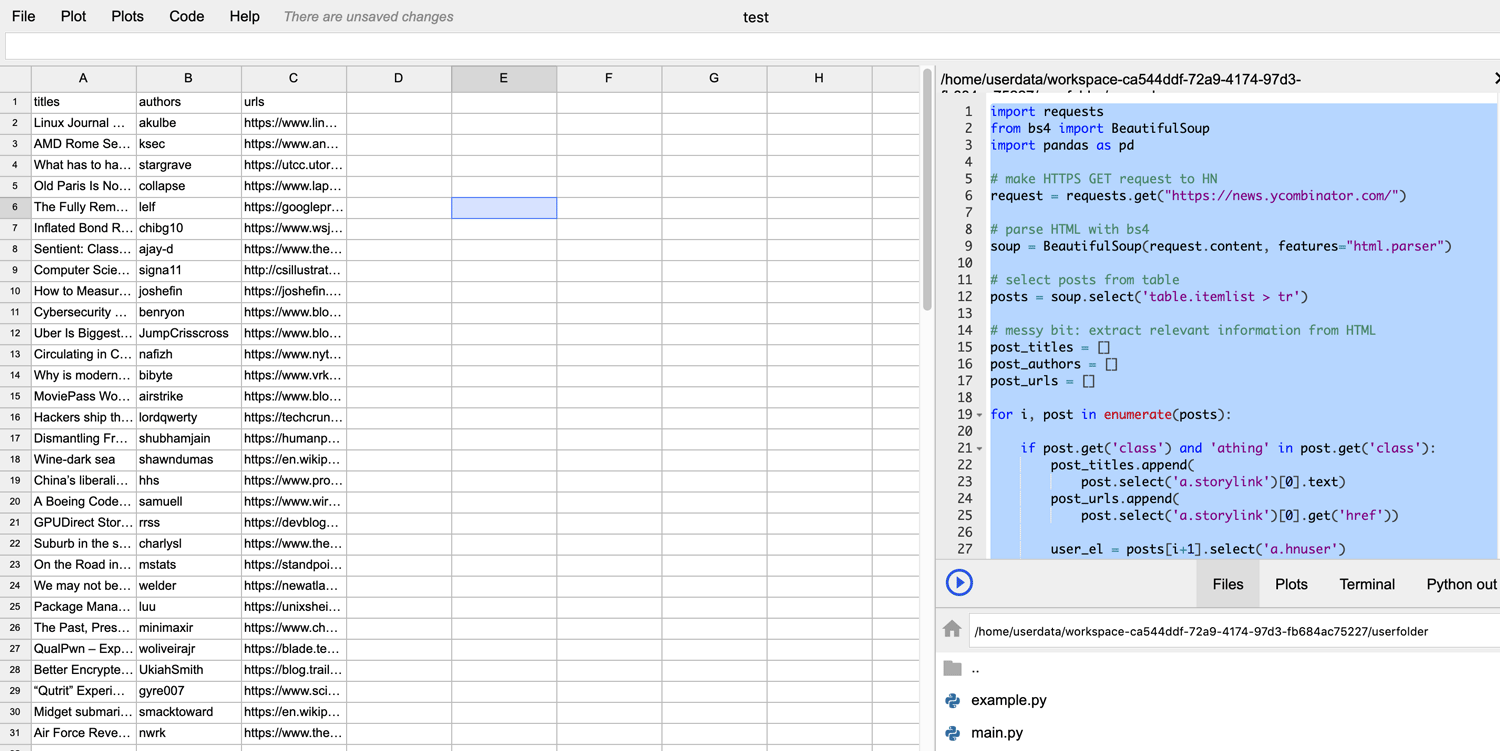
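To see what the loop above writes into the sheet without opening Grid Studio, the sketch below reproduces the same sampling logic in plain NumPy and drops the `sheet()` call. For each iteration it reports how many distinct values end up among the `n` rows. The helper `build_column` is a hypothetical name, not part of Grid Studio. It uses NumPy's `default_rng(...).standard_normal` with a fixed seed in place of `np.random.randn`, which draws from the same N(0, 1) distribution. The point it shows is that `np.repeat` pads a small sample up to `n` values, so the first iteration's 10,000 rows contain only 40 distinct draws.

```python
import math

import numpy as np

N = 10000  # same n as the Grid Studio example


def build_column(sample_n: int, rng: np.random.Generator, n: int = N) -> np.ndarray:
    """Reproduce the example's column without calling sheet()."""
    draws = rng.standard_normal(sample_n)    # sample_n independent N(0, 1) draws
    rep_count = math.ceil(n / sample_n)      # copies of each draw needed to reach n
    repeated = np.repeat(draws, rep_count)   # each draw repeated rep_count times in a row
    return repeated[:n]                      # trim to exactly n values


rng = np.random.default_rng(0)  # fixed seed so the run is reproducible
summary = []
for i in range(9):
    sample_n = 2**i * 40
    column = build_column(sample_n, rng)
    summary.append((sample_n, len(column), len(np.unique(column))))

for sample_n, length, distinct in summary:
    print(f"sample_n={sample_n:>5}  rows={length}  distinct values={distinct}")
```

Because of the trim, the distinct count can fall slightly below `sample_n`. With `sample_n = 320`, each draw is repeated 32 times (10,240 values), and cutting back to 10,000 drops the last 240 values, leaving 313 distinct draws. In the final iteration only the first 10,000 of the 10,240 draws are kept.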

```python
import requests
from bs4 import BeautifulSoup
import pandas as pd

# make HTTPS GET request to HN
response = requests.get("https://news.ycombinator.com/")
response.raise_for_status()  # fail early on HTTP errors

# parse HTML with bs4
soup = BeautifulSoup(response.content, features="html.parser")

# NOTE: the CSS selectors below match Hacker News markup at the time of
# writing; they may need updating if the site's HTML changes.

# select posts from table
posts = soup.select('table.itemlist > tr')

# messy bit: extract relevant information from HTML
post_titles = []
post_authors = []
post_urls = []

for i, post in enumerate(posts):
    # each story row has class "athing"; the author is in the next row
    if post.get('class') and 'athing' in post.get('class'):
        post_titles.append(
            post.select('a.storylink')[0].text)
        post_urls.append(
            post.select('a.storylink')[0].get('href'))

        user_el = posts[i+1].select('a.hnuser')
        if len(user_el) > 0:
            post_authors.append(user_el[0].text)
        else:
            post_authors.append('No author')

# construct dataframe from lists
df = pd.DataFrame({
    "titles": post_titles,
    "authors": post_authors,
    "urls": post_urls})

# write dataframe to sheet starting at A1 position
sheet("A1", df, headers=True)
```