# https://www.crummy.com/software/BeautifulSoup/bs4/doc/#quick-start

from urllib.request import urlopen

from bs4 import BeautifulSoup

import re

html_doc = """

<html><head><title>The Dormouse's story</title></head>

<body>

<p class="title"><b>The Dormouse's story</b></p>

<p class="story">Once upon a time there were three little sisters; and their names were

<a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,

<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and

<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;

and they lived at the bottom of a well.</p>

<p class="story">...</p>

"""

soup = BeautifulSoup(html_doc, 'html.parser')

# print(soup.prettify())

# print(soup.title)

# .string and get_text() return roughly the same result for a tag that contains only text

# print(soup.title.string)

# print(soup.title.get_text())

# Print the name of the parent tag

# print(soup.p.parent.name)

# print(soup.find_all('a'))

# Look up content by id

# print(soup.find(id='link1').string)

# Print the text of every <a> tag

# for link in soup.find_all('a'):

#     print(link.get_text())

# Get content by tag name and class name

# print(soup.find('p', {'class': 'story'}))

# print(soup.find('p', {'class': 'story'}).get_text())

# print(soup.find_all("p", class_="story"))

# Fetch the content of a web page

# resp = urlopen('http://www.baidu.com')

# baidu = BeautifulSoup(resp, 'html.parser')

# print(baidu.prettify())

# Print the names of all tags whose name starts with "b"

for tag in soup.find_all(re.compile("^b")):
    print(tag.name)

# Print the <a> tags whose href starts with http://example.com

print(soup.find_all('a', href=re.compile(r"^http://example\.com")))
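The two regular expressions above can be tried on plain strings without any HTML. The sketch below uses only the standard `re` module; the tag names and URLs in the lists are made-up sample values. The Beautiful Soup documentation describes compiled patterns as being tested with `search()`, which is why the leading `^` is what restricts the match to the start of the name, and why the dot in `example\.com` is escaped.

```python
import re

# Sample values for illustration only (not taken from a real page).
tag_names = ["html", "head", "title", "body", "b", "a", "p"]
hrefs = [
    "http://example.com/elsie",
    "http://exampleXcom/fake",                # differs only where the dot should be
    "https://other.org/http://example.com/",  # contains the prefix, but not at the start
]

starts_with_b = re.compile(r"^b")
example_links = re.compile(r"^http://example\.com")

# search() with a leading ^ only matches at the start of the string.
b_tags = [name for name in tag_names if starts_with_b.search(name)]
matching = [href for href in hrefs if example_links.search(href)]

print(b_tags)
print(matching)
```

Without the backslash, `.` would match any character, so the second sample URL would also be accepted.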

Scraping links and text from Wikipedia and storing them in a MySQL database (PyMySQL)

import pymysql.cursors

from urllib.request import urlopen

from bs4 import BeautifulSoup

import re

resp = urlopen("https://en.wikipedia.org/wiki/Main_Page")

soup = BeautifulSoup(resp, 'html.parser')

# print (soup)

listUrls = soup.find_all('a', href=re.compile('^/wiki/'))

# print(listUrls)

for url in listUrls:
    # print(url.get_text())
    # Skip links that point to JPG images
    if not re.search(r'\.(jpg|JPG)', url["href"]):
        print(url.get_text(), ' : ', 'https://en.wikipedia.org' + url["href"])
        # Connect to the database
        connection = pymysql.connect(host='localhost',
                                     user='root',
                                     password='',
                                     db='wikiUrls',
                                     charset='utf8mb4',
                                     cursorclass=pymysql.cursors.DictCursor)
        try:
            with connection.cursor() as cursor:
                sql = 'insert into `url` (`url`, `href`) values(%s, %s)'
                cursor.execute(sql, (url.get_text(), 'https://en.wikipedia.org' + url["href"]))
            connection.commit()
        finally:
            connection.close()
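As written, the script appears to open and close a MySQL connection for every link. A common alternative is to filter the links first and write them in one batch over a single connection. The sketch below shows that shape with the standard-library `sqlite3` module standing in for PyMySQL, so that it runs without a database server; the `links` sample data and the `save_links` helper are hypothetical. Note that `sqlite3` uses `?` placeholders where PyMySQL uses `%s`.

```python
import re
import sqlite3

# Hypothetical sample of (link text, href) pairs, standing in for listUrls.
links = [
    ("Main Page", "/wiki/Main_Page"),
    ("Photo", "/wiki/File:Example.JPG"),
    ("Python", "/wiki/Python_(programming_language)"),
]

# Assumption: anchored at the end and case-insensitive, which is slightly
# stricter than the unanchored pattern used in the loop above.
IMAGE_EXT = re.compile(r"\.jpg$", re.IGNORECASE)


def save_links(connection, pairs):
    """Insert all non-image links in one transaction; return the row count."""
    rows = [
        (text, "https://en.wikipedia.org" + href)
        for text, href in pairs
        if not IMAGE_EXT.search(href)
    ]
    # Parameterized query: values are passed separately from the SQL text.
    with connection:  # commits on success, rolls back on an exception
        connection.executemany("INSERT INTO url (url, href) VALUES (?, ?)", rows)
    return len(rows)


connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE url (url TEXT, href TEXT)")
saved = save_links(connection, links)
stored = connection.execute("SELECT url, href FROM url ORDER BY url").fetchall()
connection.close()
print(saved, stored)
```

With PyMySQL the same idea would use one `pymysql.connect(...)` call before the loop and `cursor.executemany(sql, rows)` with the `%s` form of the statement.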

Reading a text file

from urllib.request import urlopen

text = urlopen('https://en.wikipedia.org/robots.txt')

print(text.read().decode('utf-8'))

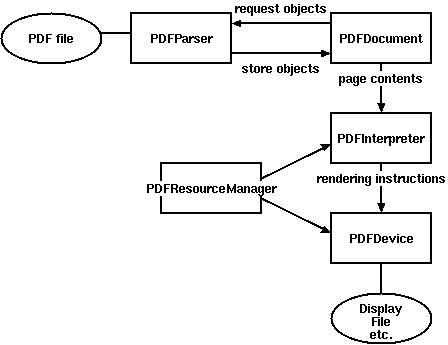
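Once the text of `robots.txt` has been downloaded, the standard library can also interpret it. A minimal sketch, assuming a small made-up rules file rather than Wikipedia's real one, that feeds the decoded lines to `urllib.robotparser.RobotFileParser.parse()` and asks whether a URL may be fetched:

```python
from urllib.robotparser import RobotFileParser

# Made-up rules for illustration; in the script above this text would come
# from text.read().decode('utf-8').
sample_rules = """\
User-agent: *
Disallow: /private/
Allow: /
"""

parser = RobotFileParser()
parser.parse(sample_rules.splitlines())

# The example.com URLs are placeholders, not real pages.
allowed = parser.can_fetch("*", "https://example.com/wiki/Main_Page")
blocked = parser.can_fetch("*", "https://example.com/private/data")
print(allowed, blocked)
```

Checking `can_fetch()` before requesting a page is one way for a scraper to respect the rules a site publishes.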

Reading a PDF file

https://pypi.python.org/pypi/pdfminer3k

# pip install pdfminer3k

from pdfminer.converter import PDFPageAggregator

from pdfminer.layout import LAParams

from pdfminer.pdfparser import PDFParser, PDFDocument

from pdfminer.pdfinterp import PDFResourceManager, PDFPageInterpreter

from pdfminer.pdfdevice import PDFDevice

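# Open the PDF file in binary mode
fp = open('media/naacl06-shinyama.pdf', 'rb')

# Create a parser bound to the file
parser = PDFParser(fp)

# Create the PDF document object
doc = PDFDocument()

# Link the parser and the document object to each other
parser.set_document(doc)
doc.set_parser(parser)

# Initialize the document (the empty string is the password)
doc.initialize('')

# Create the PDF resource manager
resource = PDFResourceManager()

# Create the layout analysis parameters
laparam = LAParams()

# Create an aggregator device
device = PDFPageAggregator(resource, laparams=laparam)

# Create the page interpreter
interpreter = PDFPageInterpreter(resource, device)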

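# Iterate over the pages of the document
for page in doc.get_pages():
    # Let the page interpreter process the page
    interpreter.process_page(page)
    # Fetch the resulting layout from the aggregator
    layout = device.get_result()
    for out in layout:
        # Only layout objects that carry text have get_text()
        if hasattr(out, 'get_text'):
            print(out.get_text())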
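The `hasattr(out, 'get_text')` check in the loop above is duck typing: any layout object that offers a `get_text()` method is printed and everything else is skipped. The sketch below isolates that pattern in a hypothetical `collect_text` helper and exercises it with stand-in classes (`FakeTextBox`, `FakeFigure`) instead of real pdfminer layout objects, so it runs without a PDF.

```python
class FakeTextBox:
    """Stand-in for a layout object that carries text (hypothetical)."""

    def __init__(self, text):
        self._text = text

    def get_text(self):
        return self._text


class FakeFigure:
    """Stand-in for a layout object with no text, such as an image."""


def collect_text(layout):
    """Return the text of every item in layout that has get_text()."""
    return [item.get_text() for item in layout if hasattr(item, "get_text")]


page = [FakeTextBox("Title\n"), FakeFigure(), FakeTextBox("Body text\n")]
texts = collect_text(page)
print("".join(texts))
```

With pdfminer, the same helper could be called as `collect_text(device.get_result())` after each `process_page` call.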

Study notes from imooc (慕课网)